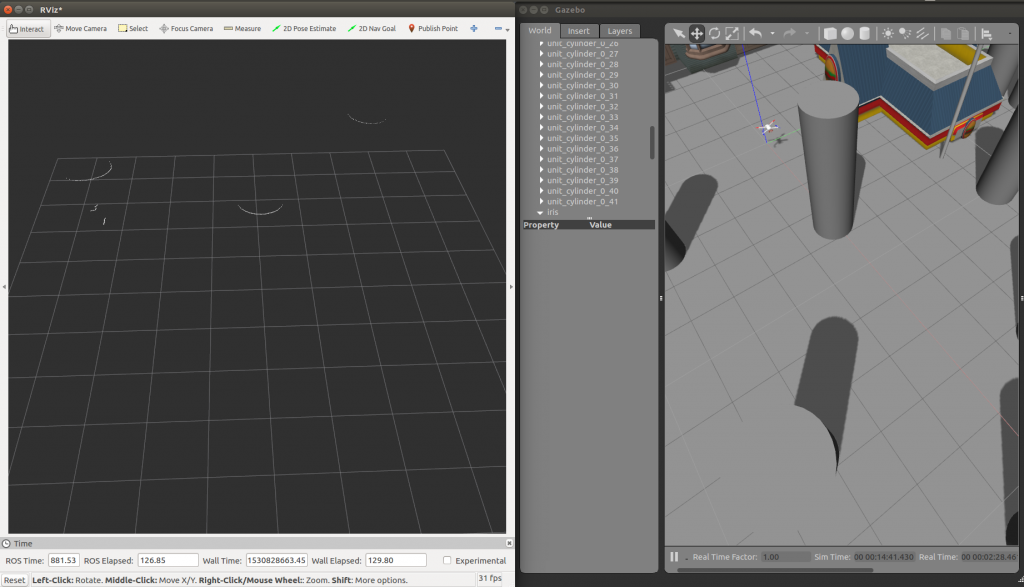

Reinforcement Learning for Obstacle Avoidance

Student: Shakeeb Ahmad

The aim of this project is to combine Deep Q-Learning with the trajectory generation algorithm developed at the MARHES Lab for vision-aided quadrotor navigation. A set of motion primitives in three directions is computed before the flight and executed online. A simulated 2-D laser scan provides the raw features, which are then further processed.
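The exact feature processing is not described here. As a minimal sketch, assuming the scan arrives as a flat list of ranges (like the `ranges` field of a ROS `sensor_msgs/LaserScan` message), one common reduction splits the beams into angular sectors and keeps the closest return in each. The function name `scan_to_features`, the number of sectors, and the maximum range are hypothetical choices, not the project's actual parameters.

```python
import math
from typing import List, Sequence


def scan_to_features(ranges: Sequence[float], num_sectors: int = 10,
                     max_range: float = 10.0) -> List[float]:
    """Reduce a 2-D laser scan to one normalized feature per angular sector.

    Hypothetical preprocessing (not the project's code): invalid returns
    (inf/NaN) are treated as max_range, each sector keeps its closest
    obstacle, and the result is scaled to [0, 1].
    """
    if num_sectors <= 0 or len(ranges) < num_sectors:
        raise ValueError("need at least one beam per sector")

    # Replace invalid returns with the maximum range and clip long readings.
    clean = [max_range if (math.isinf(r) or math.isnan(r)) else min(r, max_range)
             for r in ranges]

    beams_per_sector = len(clean) // num_sectors
    features = []
    for s in range(num_sectors):
        start = s * beams_per_sector
        # The last sector absorbs any leftover beams.
        end = len(clean) if s == num_sectors - 1 else start + beams_per_sector
        # Closest obstacle in this sector, normalized by the maximum range.
        features.append(min(clean[start:end]) / max_range)
    return features
```

For example, `scan_to_features([5.0] * 8 + [float("inf")] * 2, num_sectors=5)` yields `[0.5, 0.5, 0.5, 0.5, 1.0]`. Keeping the minimum per sector preserves the nearest obstacle, which is what matters most for avoidance, while shrinking the network input.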

An epsilon-greedy policy is used to balance exploration and exploitation. The Q-values are updated recursively using the Bellman equation: the difference between the Bellman target and the network's current Q-value estimate gives the error, which is then back-propagated to train the network. The Keras library in Python is used to train the network and to predict the desired actions.

The same Python node also subscribes to and processes the laser scan features, while a front-end C++ node detects collisions and executes the trajectories only if they are collision-free. In this way, the Keras-based Python node works alongside the pre-designed C++ collision-detection node through a service-client architecture (thanks to ROS!). This approach lets the robot learn while its exploration remains collision-free. The research is still in progress; preliminary results are shown.
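The learning step can be sketched in plain Python, with the Keras network abstracted away as lists of Q-values (one entry per motion primitive). This is a minimal illustration of epsilon-greedy action selection and the standard Deep Q-Learning Bellman target, assuming a discount factor `gamma` and a terminal flag `done`; the function names and the terminal handling are hypothetical, not the project's code.

```python
import random
from typing import List, Sequence


def epsilon_greedy(q_values: Sequence[float], epsilon: float,
                   rng: random.Random) -> int:
    """Pick a random action with probability epsilon, else the greedy one.

    q_values holds one estimate per action (e.g. three motion primitives).
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploit


def bellman_targets(q_pred: Sequence[float], action: int, reward: float,
                    q_next: Sequence[float], done: bool,
                    gamma: float = 0.99) -> List[float]:
    """Build the regression target for one transition (s, a, r, s').

    Only the entry of the action actually taken is changed, so under a
    squared-error loss the back-propagated error is
    r + gamma * max_a' Q(s', a') - Q(s, a) for that action and zero for
    the others. A terminal transition (hypothetical `done` flag) uses r only.
    """
    target = list(q_pred)
    target[action] = reward if done else reward + gamma * max(q_next)
    return target
```

In a Keras setup, `q_pred` and `q_next` would typically come from `model.predict` on the current and next feature vectors, and the returned target would be passed to `model.fit` or `model.train_on_batch`, so that only the taken action's output contributes to the mean-squared error.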