Students: Riley McCarthy, Giovanni Cordova, Isaiah Garcia-Romaine, Daniel McArthur, Emely Seheon, Tristan Thomas

Senior Contributors: Dr. Rafael Fierro

Overview:

The MARHES Lab at the University of New Mexico continues to break new ground in aerial robotics with two novel actuation projects: the Fully-Actuated Hexarotor and the Omni-Directional Multirotor (Omnicopter). These vehicles, developed as part of Riley McCarthy’s Master’s thesis with support from Sandia National Laboratories and the Air Force Research Laboratory, are designed to turn multirotors from passive inspection tools into platforms that can actively interact with their environment.

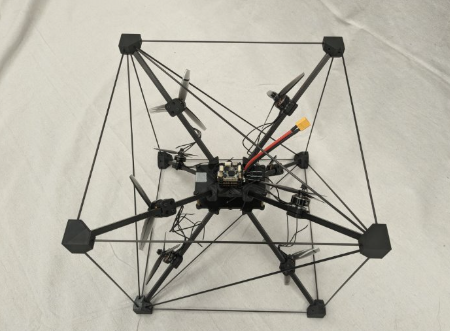

Fully-Actuated Hexarotor

Designed to overcome the limitations of conventional co-planar multirotors, whose propellers all thrust along a single body axis, the fully-actuated hexarotor uses six tilted, non-co-planar propellers to decouple translational and rotational motion, giving independent control of all six degrees of freedom (6 DOF). It can therefore hover at a pitched or rolled attitude, or translate while staying level, which makes it well suited to complex manipulation tasks.
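To illustrate why tilting the rotors matters, the sketch below builds the 6 × 6 map from rotor thrusts to body force and torque for a hypothetical hexarotor and checks its rank. The geometry is an assumption for illustration, not the lab's design: rotors on 0.3 m arms at 60° spacing, each thrust axis tilted about its own arm by an alternating ±tilt, alternating spin directions, and a made-up drag-torque coefficient. A rank of 6 means any combination of force and torque can be commanded independently.

```python
import math


def allocation_matrix(tilt_deg, arm=0.3, drag_coeff=0.02):
    """Return the 6 x 6 matrix mapping rotor thrusts (N) to [Fx, Fy, Fz, Mx, My, Mz].

    Hypothetical geometry: six rotors at 60-degree spacing on arms of length `arm` (m),
    each thrust axis tilted about its own arm by +tilt or -tilt (alternating), with
    alternating spin directions and reaction torque `drag_coeff` * thrust (N*m per N).
    """
    tilt = math.radians(tilt_deg)
    columns = []
    for i in range(6):
        psi = i * math.pi / 3                  # arm heading in the body x-y plane
        alt = 1 if i % 2 == 0 else -1          # alternating tilt sign and spin direction
        t = alt * tilt
        r = (arm * math.cos(psi), arm * math.sin(psi), 0.0)  # rotor position
        # Body z axis rotated by angle t about the arm direction (Rodrigues' formula).
        f = (math.sin(psi) * math.sin(t), -math.cos(psi) * math.sin(t), math.cos(t))
        moment = (                             # (r x f) plus reaction torque along f
            r[1] * f[2] - r[2] * f[1] + alt * drag_coeff * f[0],
            r[2] * f[0] - r[0] * f[2] + alt * drag_coeff * f[1],
            r[0] * f[1] - r[1] * f[0] + alt * drag_coeff * f[2],
        )
        columns.append(f + moment)             # one column per rotor, for unit thrust
    return [[columns[i][row] for i in range(6)] for row in range(6)]


def matrix_rank(m, tol=1e-9):
    """Rank via Gaussian elimination with partial pivoting."""
    a = [list(row) for row in m]
    n_rows, n_cols = len(a), len(a[0])
    rank = 0
    for c in range(n_cols):
        if rank == n_rows:
            break
        p = max(range(rank, n_rows), key=lambda k: abs(a[k][c]))
        if abs(a[p][c]) < tol:
            continue                           # no usable pivot in this column
        a[rank], a[p] = a[p], a[rank]
        for k in range(rank + 1, n_rows):
            factor = a[k][c] / a[rank][c]
            for j in range(c, n_cols):
                a[k][j] -= factor * a[rank][j]
        rank += 1
    return rank


if __name__ == "__main__":
    for tilt in (0.0, 30.0):
        print(f"tilt {tilt:4.1f} deg -> rank {matrix_rank(allocation_matrix(tilt))}")
```

With these assumed values the co-planar case (tilt 0°) has rank 4, because the lateral-force rows are identically zero, while a 30° alternating tilt gives rank 6.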

Hardware & Build:

- Based on a modified Tarot TL65B01 carbon fiber frame

- Equipped with tri-blade 9-inch propellers and 2806.5-1460KV motors

- Integrated with an Odroid-XU4 companion computer and a Rokubi Mini force/torque sensor

- Runs the PX4 flight stack, with ROS 2 Foxy as middleware

Testing & Control:

- Position controlled by a custom PID controller, with attitude handled by PX4’s built-in attitude controller

- Executed trajectory-tracking tasks: hovering with pitch/roll offsets, figure-eight paths, and level translation

- Demonstrated force-feedback manipulation with a hybrid position/force controller that uses real-time measurements from the Rokubi sensor to make wall contact, push steadily against the surface, and move while maintaining contact
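A hybrid position/force controller splits the task axes: along the contact normal it regulates force, and along the remaining axes it tracks position. The sketch below illustrates that idea only; it is not the controller flown on the hexarotor. It assumes the wall normal is the world x axis, that the Rokubi reading has already been rotated into the world frame, and uses hypothetical gains. Its output is a commanded force vector that a full system would combine with gravity compensation before handing it to the lower-level attitude and allocation layers.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class PID:
    """Textbook PID on a scalar error (no anti-windup or derivative filtering)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        # Skip the derivative on the first call, when no previous error exists.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


class HybridPositionForceController:
    """Force control on the selected axes, position control on the others (world frame)."""

    def __init__(self, force_axes: Sequence[int]):
        self.force_axes = set(force_axes)      # e.g. {0}: world x is the wall normal (assumed)
        # Hypothetical gains, one controller per axis.
        self.pos_pid = [PID(kp=2.0, ki=0.1, kd=1.0) for _ in range(3)]
        self.force_pid = [PID(kp=0.5, ki=1.0, kd=0.0) for _ in range(3)]

    def command(self, pos_ref, pos, force_ref, force_meas, dt) -> List[float]:
        """Return a commanded force vector (N); gravity compensation is left to the caller."""
        cmd = []
        for axis in range(3):
            if axis in self.force_axes:
                # Feed forward the desired contact force, then correct with the F/T error.
                error = force_ref[axis] - force_meas[axis]
                cmd.append(force_ref[axis] + self.force_pid[axis].update(error, dt))
            else:
                # Ordinary position tracking; position setpoints on force axes are ignored.
                cmd.append(self.pos_pid[axis].update(pos_ref[axis] - pos[axis], dt))
        return cmd
```

For example, `HybridPositionForceController(force_axes=[0])` pushes along x with the requested contact force while holding y and z; changing `force_axes` re-targets the same structure to a different contact normal.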

Omni-Directional Multirotor (Omnicopter)

Expanding on the hexarotor’s capabilities, the omnicopter is fully omni-directional: it can produce thrust in any 3D direction, regardless of its orientation. This flexibility makes the design well suited to emulating spacecraft dynamics and to maneuvers that conventional multirotors cannot perform.
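Thrusting in any direction regardless of orientation means the allocator must deliver whatever body-frame force the current attitude requires. A minimal sketch, assuming a ZYX (yaw-pitch-roll) Euler convention with R mapping body to world, so that f_body = R^T f_world:

```python
import math


def rotation_zyx(roll, pitch, yaw):
    """Body-to-world rotation R = Rz(yaw) @ Ry(pitch) @ Rx(roll), as nested lists."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def world_to_body_force(force_world, roll, pitch, yaw):
    """Express a desired world-frame force in the body frame: f_body = R^T f_world."""
    R = rotation_zyx(roll, pitch, yaw)
    # R is orthonormal, so its transpose is its inverse.
    return [sum(R[i][j] * force_world[i] for i in range(3)) for j in range(3)]


if __name__ == "__main__":
    hover = [0.0, 0.0, 10.0]                   # hypothetical 10 N upward force
    print(world_to_body_force(hover, math.pi, 0.0, 0.0))      # flipped upside down
    print(world_to_body_force(hover, 0.0, math.pi / 2, 0.0))  # pitched 90 degrees
```

Flipped upside down (roll = π), a 10 N upward hover force becomes about -10 N along body z; pitched 90°, it becomes about -10 N along body x. A conventional multirotor, limited to positive thrust along body z, cannot produce either, which is exactly the gap omni-directional thrust closes.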

Hardware & Control:

- Features a symmetric design and fixed, non-planar propellers

- Uses PX4’s updated (dynamic) control allocation to map the desired thrust and torque onto the non-planar rotors

- Computes motor inputs with the Moore-Penrose pseudo-inverse of the allocation matrix

- Tested extensively in Gazebo simulation and in real-world flights using a Vicon motion-capture system
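The pseudo-inverse allocation step can be sketched generically. Given a 6 × n effectiveness matrix A (n ≥ 6) that maps rotor thrusts to body force and torque, and a desired wrench w, the Moore-Penrose pseudo-inverse gives the minimum-norm thrust vector u = A^+ w = A^T (A A^T)^-1 w, provided A has full row rank. This dependency-free illustration is not PX4's implementation; it assumes reversible motors (negative thrust commands are allowed) and ignores actuator saturation. Rather than forming the inverse explicitly, it solves (A A^T) y = w and sets u = A^T y.

```python
def solve(M, b):
    """Solve M x = b for square, non-singular M by Gaussian elimination with partial pivoting."""
    n = len(M)
    aug = [list(M[i]) + [b[i]] for i in range(n)]
    for c in range(n):
        p = max(range(c, n), key=lambda k: abs(aug[k][c]))
        if abs(aug[p][c]) < 1e-12:
            raise ValueError("A A^T is singular: allocation matrix lacks full row rank")
        aug[c], aug[p] = aug[p], aug[c]
        for k in range(c + 1, n):
            factor = aug[k][c] / aug[c][c]
            for j in range(c, n + 1):
                aug[k][j] -= factor * aug[c][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):             # back substitution
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x


def allocate(A, wrench):
    """Minimum-norm rotor thrusts u = A^T (A A^T)^-1 w for a 6 x n matrix A of full row rank."""
    rows, n = len(A), len(A[0])
    AAt = [[sum(A[i][k] * A[j][k] for k in range(n)) for j in range(rows)] for i in range(rows)]
    y = solve(AAt, wrench)
    return [sum(A[i][k] * y[i] for i in range(rows)) for k in range(n)]


if __name__ == "__main__":
    # Toy 6 x 8 matrix (not a real airframe): rotors 6 and 7 duplicate axes 0 and 1.
    A = [[1.0 if j == i else 0.0 for j in range(8)] for i in range(6)]
    A[0][6] = 1.0
    A[1][7] = 1.0
    print(allocate(A, [2.0, 2.0, 1.0, 1.0, 1.0, 1.0]))
```

In practice A would be built from the omnicopter's rotor positions and thrust directions. The minimum-norm property spreads effort evenly across redundant rotors (in the toy example, the 2 N demands on axes 0 and 1 are split 1 N / 1 N), but commands that exceed motor limits still need clipping or a constrained allocator.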

Experimental Achievements:

- Hovering while rotating 360° about multiple axes

- Translational motion while spinning in arbitrary orientations

- Coverage of the thrust envelope in all directions of 3D space

Both projects represent major advances in the design and control of aerial robotic systems. By giving drones full six-degree-of-freedom control and hybrid force control, the MARHES Lab is opening the door to aerial systems that can manipulate objects, interact with surfaces, and perform complex real-world tasks previously reserved for ground-based robots.